Learning how AI works on a deeper level has always been on my bucket list. I found this amazing video by Andrej Karpathy that walks you through building an autograd engine first and then moves on to creating a very basic neural network. Here is my experience following the video.

Autograd Engine

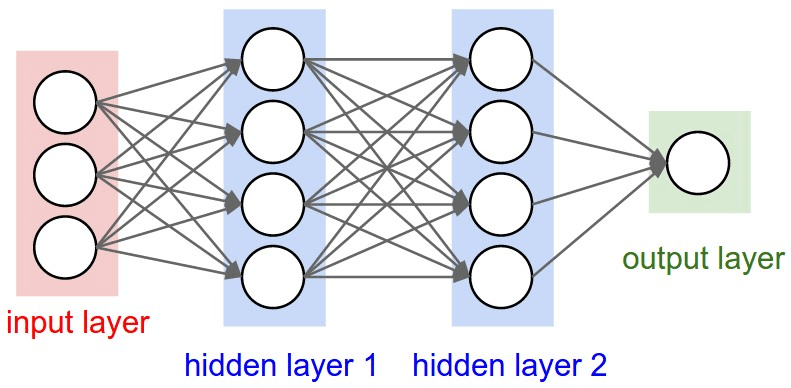

A neural network is basically a graph of neurons: we give it an input, and it returns an output. Each neuron has parameters (weights and a bias) that directly affect that output. We train a neural network to produce a desired output by tweaking these parameters. But how do we find the parameter values that make the network output the desired value?
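To make "parameters" concrete, here is a minimal sketch of a single neuron in plain Python, assuming two inputs and a tanh activation. The function name `neuron` and all the numbers are illustrative, not taken from the video. Keeping the input fixed and changing only the weight `w1` changes the output, which is exactly the knob that training turns.

```python
import math

# Hypothetical single neuron with two inputs and a tanh activation.
# w1, w2 (weights) and b (bias) are the parameters that training adjusts.
def neuron(x1, x2, w1, w2, b):
    return math.tanh(w1 * x1 + w2 * x2 + b)

x1, x2 = 2.0, 0.0  # the input stays fixed

before = neuron(x1, x2, w1=-3.0, w2=1.0, b=6.88)
after = neuron(x1, x2, w1=0.5, w2=1.0, b=6.88)  # only w1 changed
print(before, after)  # same input, different outputs
```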

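It turns out our old friend calculus comes to the rescue. We can estimate how a small change in a parameter affects the network's output by calculating the gradient (derivative) of the output with respect to that parameter (in practice, of a loss that measures how far the output is from the desired value). The sign of the gradient tells us which direction to nudge the parameter, and its magnitude tells us how strongly the output responds.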
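One way to see what the gradient means is to estimate it numerically: nudge a parameter by a tiny amount `h` and measure how much the output moves. The sketch below does this for the weight `w1` of a hypothetical tanh neuron (the names and values are illustrative) and compares the estimate with the derivative worked out by hand using the chain rule. An autograd engine computes the same quantity analytically, without any nudging.

```python
import math

# Hypothetical tanh neuron viewed as a function of one parameter, w1,
# with the input (x1, x2) and the other parameters (w2, b) held fixed.
def output(w1, x1=2.0, x2=0.0, w2=1.0, b=6.88):
    return math.tanh(w1 * x1 + w2 * x2 + b)

def numerical_gradient(f, p, h=1e-6):
    # Nudge the parameter by a tiny h and measure how much the output moves:
    # d(output)/d(p) is approximately (f(p + h) - f(p)) / h
    return (f(p + h) - f(p)) / h

w1 = -3.0
grad = numerical_gradient(output, w1)

# Chain rule by hand: d/dw1 tanh(w1*x1 + ...) = (1 - tanh(...)**2) * x1, with x1 = 2.0
analytic = (1 - output(w1) ** 2) * 2.0
print(grad, analytic)  # the two values agree closely
```

A positive gradient here means that increasing `w1` slightly would increase the output.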

An autograd engine lets us build a neural network (modeled as a graph) and easily run backpropagation to calculate the gradient of every parameter automatically. Hence the name autograd (automatic gradient) engine.
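The core of such an engine can be sketched as a scalar `Value` class in the style of micrograd. This is an illustrative sketch, not tinygrad's actual code. Each operation records its inputs and a small `_backward` function that applies the chain rule locally. `backward()` then visits the graph in reverse topological order, so each node's gradient is complete before it is passed further back. Only `+`, `*` and `tanh` are implemented here.

```python
import math

class Value:
    """A scalar that remembers how it was computed, so gradients can flow back."""

    def __init__(self, data, children=()):
        self.data = data
        self.grad = 0.0                 # d(final output) / d(this value)
        self._backward = lambda: None   # pushes self.grad down to the children
        self._prev = children

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def _backward():
            # d tanh(x)/dx = 1 - tanh(x)**2
            self.grad += (1 - t ** 2) * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph so a node runs its _backward only
        # after every node that depends on it has already done so.
        topo, visited = [], set()

        def build(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build(child)
                topo.append(v)

        build(self)
        self.grad = 1.0  # d(out)/d(out) = 1
        for v in reversed(topo):
            v._backward()


# Usage: a single tanh neuron with automatic gradients.
x1, x2 = Value(2.0), Value(0.0)
w1, w2, b = Value(-3.0), Value(1.0), Value(6.88)
out = (x1 * w1 + x2 * w2 + b).tanh()
out.backward()
print(w1.grad, w2.grad, b.grad)
```

The gradients use `+=` rather than `=` because a value can feed into several operations, and the contributions from each path must be summed.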

Tinygrad

Tinygrad is the engine I built. It is a very basic autograd engine that:

- Supports building simple neural networks

- Only works with scalar values, not vectors or tensors

- Supports backpropagating through the neural network and calculating gradient values for each parameter

I ran a tiny experiment with tinygrad: I created a simple neural network and trained it to produce target outputs for a given set of inputs. I was just playing with a handful of numbers, but it was quite fun. You can find my Jupyter notebook below.
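A training run of that kind might look like the following sketch. It is hypothetical, not the code from my notebook: it restates a minimal scalar `Value` class (with subtraction added for the loss) and fits a single tanh neuron to a small, made-up dataset of four 3-input examples with targets of -1 or 1. Each step runs a forward pass to compute a squared-error loss, resets the gradients, backpropagates, and nudges every parameter against its gradient. The learning rate of 0.05 and the 100 steps are arbitrary choices.

```python
import math

class Value:
    """Minimal scalar autograd node (a sketch in the spirit of micrograd)."""

    def __init__(self, data, children=()):
        self.data, self.grad = data, 0.0
        self._backward = lambda: None
        self._prev = children

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def _backward():
            self.grad += out.grad
            other.grad += out.grad
        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def _backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = _backward
        return out

    def __neg__(self):
        return self * -1.0

    def __sub__(self, other):
        return self + (-other)

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))
        def _backward():
            self.grad += (1 - t ** 2) * out.grad
        out._backward = _backward
        return out

    def backward(self):
        # Reverse topological order: each node's grad is final before it propagates.
        topo, visited = [], set()
        def build(v):
            if v not in visited:
                visited.add(v)
                for child in v._prev:
                    build(child)
                topo.append(v)
        build(self)
        self.grad = 1.0
        for v in reversed(topo):
            v._backward()

# Hypothetical toy dataset: 3 inputs per example, target -1 or 1.
xs = [[2.0, 3.0, -1.0], [3.0, -1.0, 0.5], [0.5, 1.0, 1.0], [1.0, 1.0, -1.0]]
ys = [1.0, -1.0, -1.0, 1.0]

# A single tanh neuron; fixed initial values keep the run reproducible.
ws = [Value(0.1), Value(0.1), Value(0.1)]
b = Value(0.0)
params = ws + [b]

def predict(x):
    act = b
    for w, xi in zip(ws, x):
        act = act + w * xi
    return act.tanh()

learning_rate = 0.05
losses = []
for step in range(100):
    # Forward pass: sum of squared errors over the whole dataset.
    loss = Value(0.0)
    for x, y in zip(xs, ys):
        diff = predict(x) - y
        loss = loss + diff * diff
    losses.append(loss.data)

    # Backward pass: clear old gradients, then backpropagate.
    for p in params:
        p.grad = 0.0
    loss.backward()

    # Gradient descent: move each parameter against its gradient.
    for p in params:
        p.data -= learning_rate * p.grad

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
print([round(predict(x).data, 3) for x in xs])
```

Resetting `p.grad` to zero before each `backward()` call matters because the engine accumulates gradients with `+=`. Skipping it would mix gradients from earlier steps into the update.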

Micrograd

My tinygrad engine is largely inspired by Andrej Karpathy's micrograd autograd engine.